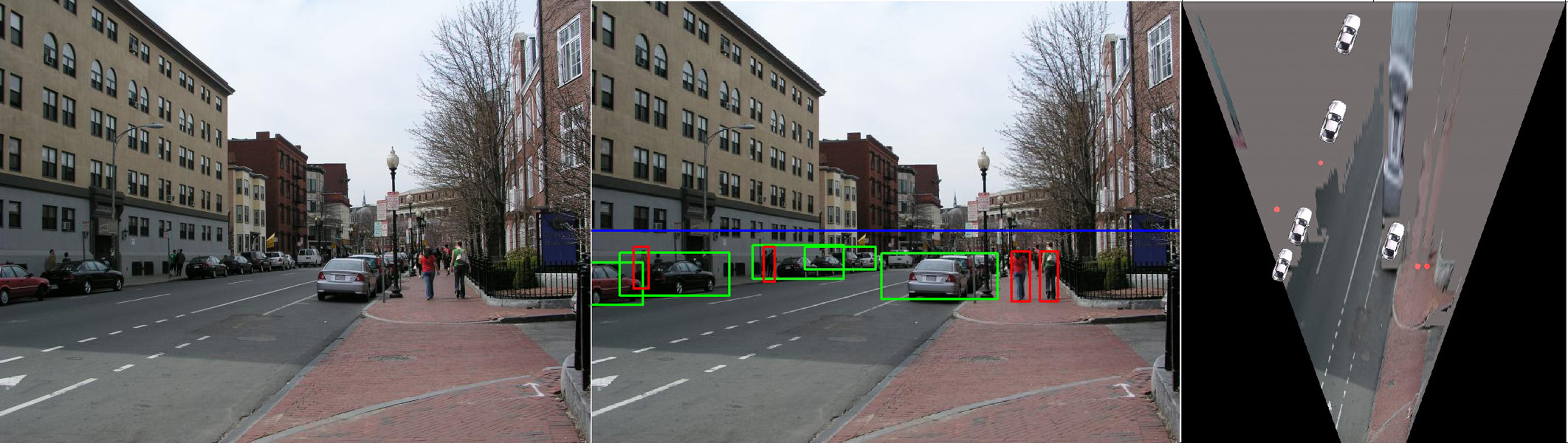

Image understanding requires not only individually estimating elements of the visual world but also capturing the interplay among them. We provide a framework for placing local object detection in the context of the overall 3D scene by modeling the interdependence of objects, surface orientations, and camera viewpoint.

Most object detection methods consider all scales and locations in the image as equally likely. We show that with probabilistic estimates of 3D geometry, both in terms of surfaces and world coordinates, we can put objects into perspective and model the scale and location variance in the image. Our approach reflects the cyclical nature of the problem by allowing probabilistic object hypotheses to refine geometry and vice-versa. Our framework allows painless substitution of almost any object detector and is easily extended to include other aspects of image understanding.
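To make the link between viewpoint, location, and scale concrete, here is a minimal sketch of the standard ground-plane perspective relation. It is an illustration under stated assumptions, not the paper's implementation: it assumes a pinhole camera with negligible tilt and roll, a flat ground plane, and image rows measured upward from the bottom of the image. Under those assumptions, an object of world height `y` whose base sits at row `v_base` below a horizon at row `v_horizon`, seen from camera height `y_c`, has image height `h ≈ y * (v_horizon - v_base) / y_c`. The function and parameter names are hypothetical.

```python
def expected_image_height(object_height, camera_height, v_horizon, v_base):
    """Image height of an upright object resting on the ground plane.

    Illustrative assumptions (not taken from the page): pinhole camera with
    negligible tilt and roll, flat ground, and image rows measured upward
    from the image bottom. The result is in the same units as the rows
    (for example, a fraction of the image height).
    """
    if camera_height <= 0:
        raise ValueError("camera_height must be positive")
    drop = v_horizon - v_base  # how far the object's base lies below the horizon
    if drop <= 0:
        raise ValueError("the object's base must lie below the horizon")
    return object_height * drop / camera_height


def implied_world_height(image_height, camera_height, v_horizon, v_base):
    """Inverse relation: world height implied by a candidate detection box."""
    drop = v_horizon - v_base
    if drop <= 0:
        raise ValueError("the object's base must lie below the horizon")
    return image_height * camera_height / drop


# Example: a 1.7 m pedestrian seen from a 1.7 m high camera, with the horizon
# at mid-image (0.5) and the feet at row 0.2, spans about 0.3 of the image.
h = expected_image_height(1.7, 1.7, v_horizon=0.5, v_base=0.2)

# A candidate box of that size at that location implies a plausible height,
# while the same box placed much closer to the horizon would imply a giant.
y_plausible = implied_world_height(h, 1.7, v_horizon=0.5, v_base=0.2)
y_implausible = implied_world_height(h, 1.7, v_horizon=0.5, v_base=0.45)
```

A detector that treats all scales and locations as equally likely cannot tell these two hypotheses apart. With an estimate of the horizon and camera height, the second box can be down-weighted because its implied world height is unrealistic.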

This work is the latest in an ongoing effort in Geometrically Coherent Image Interpretation. In our SIGGRAPH'05 paper Automatic Photo Pop-up, we show how to construct simple "pop-up" 3D models from a single image. In our ICCV'05 paper Geometric Context from a Single Image, we provide a quantitative analysis of our system and extend our work by subclassifying vertical regions and using the geometric labels as context for object detection.

Translations are available in Latvian and Slovak. Links to new translations will not be added.

Dataset

Data is available here.

Publications

D. Hoiem, A.A. Efros, and M. Hebert, "Putting Objects in Perspective", to appear in CVPR 2006.

pdf